Is the World Ready for Google Glass Now? (No.)

10 years later, the future is wearing shades again.

Welcome back to Social Signals

This week we're talking about smart glasses and what we can learn from Google Glass. I also made an AI-generated short film with Google Veo 3 (ahem, Faxzilla LIVES!!), and I got my irises scanned by World in LA for digital identity verification and wrote about the experience.

👇 For paid subscribers this week:

📊 Chart of the Week: How CMOs are using influencers to build trust + drive revenue

💼 AI Mandates Report: Which brands are really doing the work, and which are just peacocking

⚡ 19 Social Signals I’m Tracking: From AI-optimized YouTube ads to holographic drones bringing ads to skies everywhere.

Let’s get into it. Keep going! 🚀✨

Greg


Remember when the idea of carrying a phone everywhere sounded ridiculous? Like, who needs email in their pocket at all times? And yet… here we are, checking our screens 300 times a day.

So when I say we’ll eventually leave our phones at home and wear smart glasses as our primary device, it might sound funny now. But so did smartphones: right up until they weren’t. The future always feels far-fetched... until it's just what we do.

More than a decade ago, I was one of the first to wear a computer on my face. Not metaphorically, but literally. As an early member of the Google Glass Explorer Program, I wandered the world with a heads-up display hovering just above my right eye, fielding side-eyes from strangers and questions from friends like: “Wait, are you recording me right now?” and “Can you please take those off?”

And honestly, fair questions.

It was early days. We didn’t know that cameras would end up everywhere. Not just on our faces, but in our doorbells, dashboards, and even our fridges. We didn’t know that talking to an AI assistant would become more normal than calling a human.

But many of us did believe that companies like Meta, Google, Apple, Amazon, and OpenAI would all be racing to build a screenless future powered by ambient intelligence.

That’s part of what led to my quote in Julio’s story…

“Rather than shun and fear-monger away something I don’t understand, I’d rather spend my time experimenting and innovating around it.”

Back then, Glass felt like science fiction. Today, it feels like the rough draft of something inevitable.

At one point, I even figured out how to send a fax through Glass. Yes, a fax. Why? Because that’s what innovation looked like in the early 2010s: clunky, ambitious, and just a little ridiculous. But I believed in the potential, not just the prototype.

Fast-forward to 2025, and Google is giving smart glasses another go, this time with actual momentum and modern AI muscle behind it.

And folks seem to be here for it. Look at this Fast Company headline: “Google’s second swing at smart glasses seems a lot more sensible.”

At Google I/O, we saw Android XR prototypes showing off live translations, camera-enabled real-time search, and Gemini Live integration. It’s a much more confident take on the “your face is the interface” future. Read my take on this here (reminder: paid subscribers to Social Signals have access to the full archives. Upgrade your subscription here).

From Glassholes to POV Heroes

Remember the Glass backlash? People wearing the device were dubbed “glassholes.” That came partly from privacy concerns (there was no clear indicator when it was recording), and partly because... well, early adopters can be intense (and foolish), and Google didn’t do any PR around this weirdo tech bomb it dropped on society.

Google Glass prioritized utility over fashion. It was expensive and dorky, and it didn’t seem to solve a clear problem, despite plenty of signals about where it could.

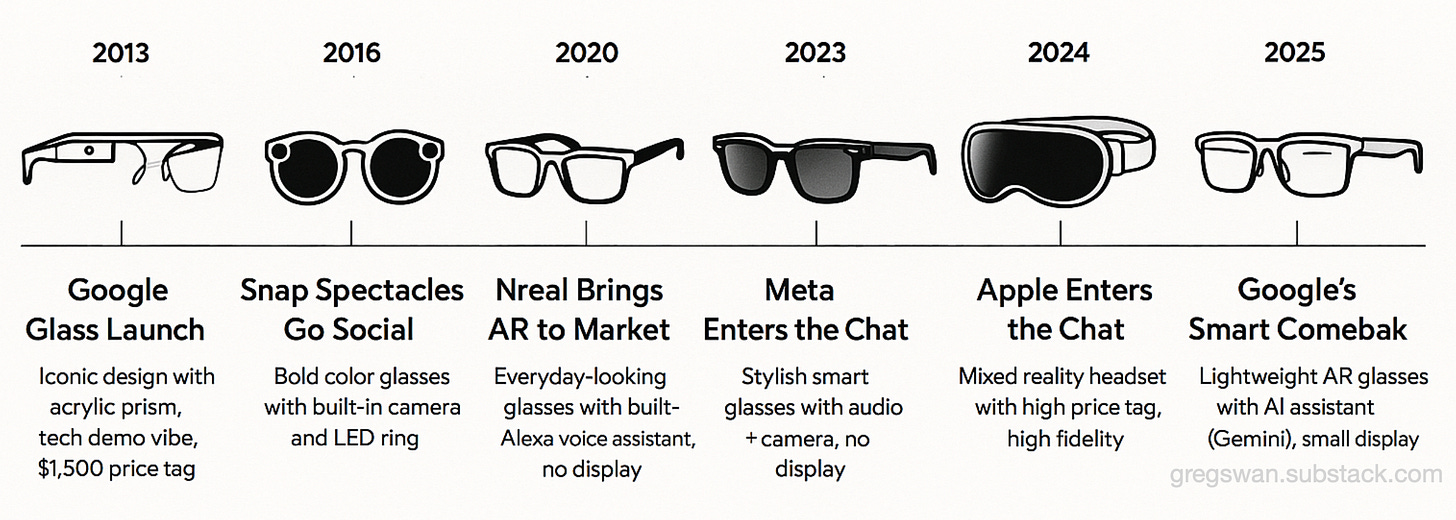

Meanwhile, when Snap released Spectacles, it leaned hard into aesthetics, privacy, and accessibility: the hot colors of 2016, a built-in recording light, and a $130 price point. That contrast said a lot about how the market perceived wearability versus wearable tech.

But today, with the rise of ambient computing and generative AI, utility is getting cool again.

Google’s XR glasses aren’t arriving in a vacuum. Meta’s Ray-Ban smart glasses are already in the wild. Apple has planted its spatial computing flag with the Vision Pro. Samsung and Google are teaming up on Project Moohan. And then there’s Project Aura from Xreal, aiming to turn your glasses into a personal movie screen.

Apple, Jony Ive, and the Ambient AI Race

Apple is in this game, too. Its rumored plan to launch AI-powered smart glasses by the end of 2026 isn’t about rushing to catch up; it’s the same playbook we’ve seen time and time again. From the iPod to the iPhone to the Vision Pro, Apple waits for a product category to simmer before it swoops in with something polished, premium, and paradigm-shifting.

The Vision Pro may have felt like a developer unit at launch, but it showed Apple’s hand: it’s not just building XR hardware; it’s building the future of human-computer interaction. With glasses next on the roadmap, the expectation isn’t just elegance. It’s usefulness. And ecosystem integration. And that classic Apple “oh wow” moment.

But while Apple waits, OpenAI is already sprinting.

OpenAI’s collaboration with legendary designer Jony Ive (you know, the guy behind the iPhone, iPad, and MacBook) is one of the most intriguing wildcard entries in this race. Their goal? To build a post-smartphone device for ambient AI interaction. Something screenless. Something intuitive. Something... delightful.

“What it means to use technology can change in a profound way,” Sam Altman said. “I hope we can bring some of the delight, wonder and creative spirit that I first felt using an Apple Computer 30 years ago.”

Whether this becomes a pair of glasses, a wearable pin, or something entirely new, it signals a sea change in how we’ll engage with AI. The interface won’t be an app. It’ll be everything around us.

Here’s a Foursquare founder’s take on what Altman and Ive are cooking up for this AI assistant hardware…

Early AI Assistant Tech

It reminds me of my Humane AI Pin, my Limitless AI pendant, and my Bee Pioneer, all rolled into one.

AND! We might end up with a combination of devices: neural interface tech on your wrist (like the wristband in Meta’s Orion system, or the Mudra band that was a favorite of mine at CES the last two years), some kind of smart glasses, and some kind of wearable, all working in concert to replace (and improve on) the phone you carry today.

While we’re waiting to see what this device will be, the march to glasses continues. I actually think smart glasses may be more realistic than using three devices to replace a phone. Give me one device to replace my one device, please.

So back to Google Glass: What’s Changed in 10 Years?

Just a few things:

Design: Today’s prototypes actually look like something you’d wear in public. Ray-Ban (with Meta) and Warby Parker (with Google) are involved now.

Privacy: There’s more transparency (hello, LED indicators) and more cultural readiness around being “always on.” I think the most affluent and educated people are deeply skeptical about who has that data and how it’s being used, but many folks are still woefully uninformed about how data and ads work. As long as the utility is there…

Utility: AI makes these glasses useful in real time. Translating signs, summarizing articles, answering questions about the painting you're staring at in a museum, having access to your calendar, contacts and email, and more. Solve problems and the tech sells itself.

Connectivity: Whether tethered to your phone or powered by their own cell signal, they’re no longer standalone gadgets with limited functionality. They're extensions of the AI assistants we already use.

Phone Addiction: We don’t feel good about how much we’re scrolling. We want to be heads-up and more present. But the technology isn’t there. Yet. Glasses could eventually give us both.

The Real Hurdle: Culture

Here’s the thing: the technology might be (closer to) ready, but culture is still catching up.

Google Glass flopped not just because of its price or limited features, but because society wasn’t ready for people wearing always-on cameras in public. It was weird. It was invasive. It raised questions no one had good answers for yet. And, as mentioned, Google didn’t do its job on PR.

Fast-forward to today, and those questions are still around, but they feel slightly less uncomfortable. We talk to our phones in public. We wear AirPods everywhere. Some of us even wear smart rings (I’m in year two of my Oura ring and love it). We’ve become more accustomed to tech blurring the line between personal and public.

Still, the leap to glasses as a primary interface is a big one. The devices have to blend in. The features have to be genuinely helpful. And the experience can’t feel like a Black Mirror episode.

Mass adoption won't come from specs or novelty. It’ll come when the glasses solve real problems in non-weird ways.

So... Are We Ready for Google Glass Yet?

No, not quite. But we’re getting closer.

We’re seeing the platform wars forming in real time: Meta, Apple, Google, OpenAI, Microsoft, Amazon and others are all building in the same general direction, but with very different visions. We’re still a few killer apps and price drops away from widespread adoption. But this time, unlike 2013, it’s not just about curiosity or cool factor.

Final Thought:

I’ve seen this story before, and I still believe in the plot. The future is getting a new lens. And this time, we might just be able to see it coming. I’ve got puns!

Will the future look like Keiichi Matsuda’s short film Hyper-Reality (2016), experienced through smart glasses? We’ll find out soon enough.

📌 RELEVANT LINKS from the Social Signals Archives:

The most successful Social Media ads are going to be in Augmented Reality (2021)

Exploring the Tech Trends of SXSW 2024: A Journey into the Future (2024)

Faxzilla Returns! 📠🦖

I spent some time experimenting with Google’s new AI video tool, Veo 3, with its experimental audio. The result is eight short clips, all generated from text prompts and edited together in CapCut, and the whole thing took less than an hour.

FAXZILLA!!! Also, this is my real fax number if you want to fax me what you think of this. 📠

🫀 Good News: I’ve Verified My Humanity.

Last month I wrote about the coming online identity crisis, where AI-generated content becomes indistinguishable from real humans, and the need for a new kind of infrastructure to prove we’re… well, us.

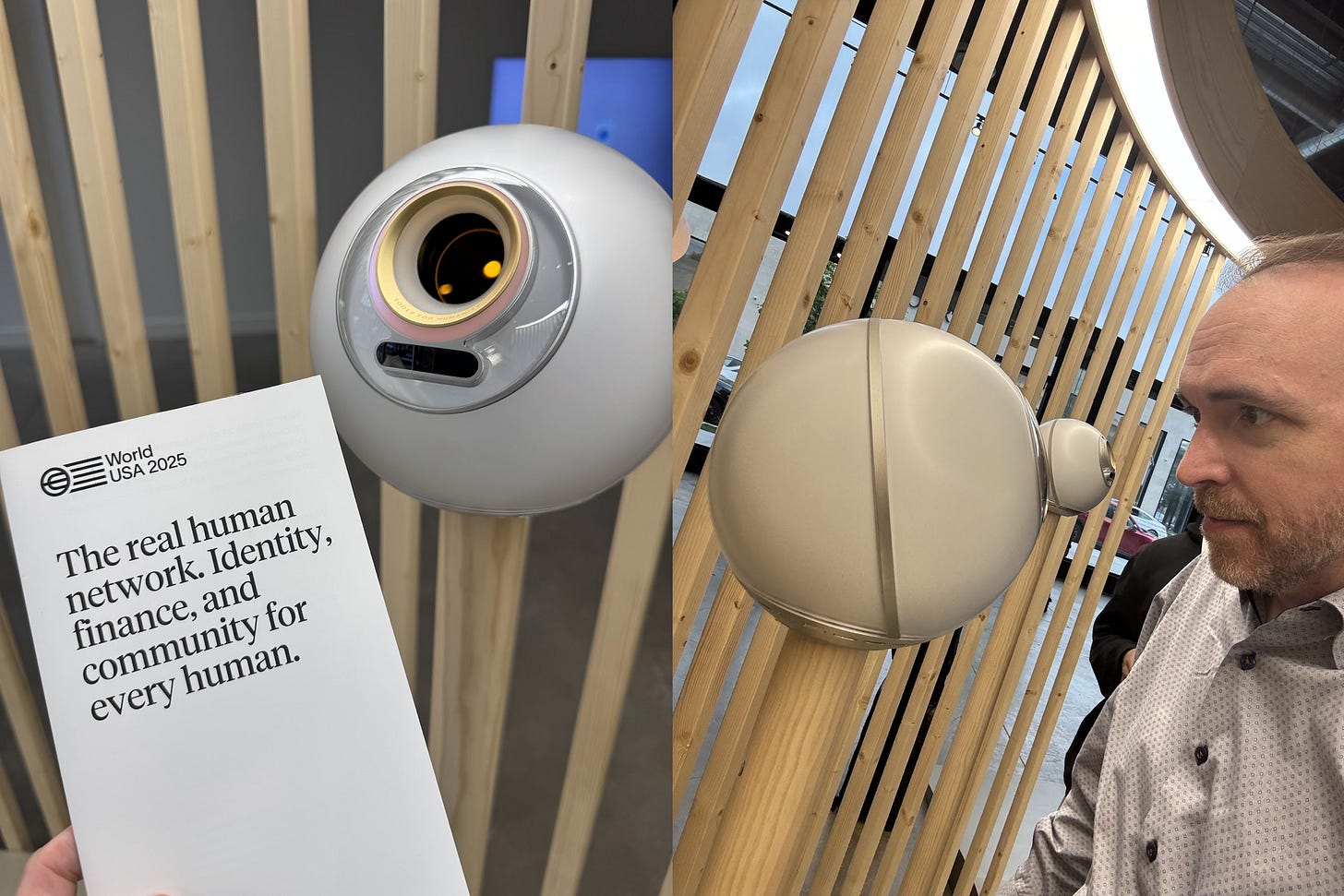

This week I went to World’s new LA flagship location and got my irises scanned. Yes, seriously.

It was simple. There were five people working there, and they told me about 20 folks come in each day. Most of that is foot traffic on Melrose (expensive real estate!), and it’s safe to say I was the only one who took a 45-minute Lyft, lugging a carry-on suitcase around West Hollywood, just to get my irises scanned.

The entire process took about 3 minutes. Get on the wifi. Open the app. Scan a code. Quit taking selfies because you’re making it time out, Greg. Get scanned. Wait for your confirmation. The whole time I was asking questions and taking pictures.

Because there’s more to this than World’s pop-up…

World’s Orb isn’t just scanning eyeballs: it’s scanning for solutions.

Their bold bet? Biometric “proof of personhood” stored on the blockchain: a World ID you can use to prove you're a unique human, not a bot or synthetic persona. It’s like CAPTCHA, evolved for the generative AI age. And they already have partnerships with Visa and Match/Tinder.

I’m not endorsing this as the answer. There are still big questions:

🧠 Why won’t they ever let me delete my iris scan from their system?

🛡️ Is your iris scan the most private part of you? Was it worth $42 in crypto?

💸 When does humanity verification become pay-to-play?

😳 How do we feel about OpenAI’s leadership both breaking what’s real in 2025 and monetizing it?

But here’s what I do know:

Verifying real humans online is no longer optional. It’s critical infrastructure.

As generative AI accelerates, we’re going to need new ways to rebuild trust, reduce fraud, and make sure people (ahem, actual people) aren’t erased in the noise.

I got my eyes scanned. Would you? Read more from last month: Proving you’re a real human online will be a problem from now on, so what do we do about it?

And on my flight home, I learned the Orb is on the cover of TIME magazine this month… Maybe that will bring in more than 20 folks a day to get scanned?